This week’s topic on #mathchat was “What books would you recommend for mathematics and/or teachers, why?”. I offered several suggestions in Thursday’s chat, but wanted to go back and explain in more detail “why”. I’ve also added a few to help round out the selection. These books are listed in approximate order of “increasing density”, with the more casual titles at the top and the more math intensive titles near the bottom. Of course, this ordering is my own subjective opinion and should be taken with a grain of salt.

Disclaimer: The author bears no connection to any of the publications listed here, nor was the author compensated for these reviews in any way.

Lewis Carroll – “Alice’s Adventures in Wonderland”, “Through the Looking-Glass”

Recommended for: all ages, casual readers

Charles Dodgson, perhaps better known by his pen-name “Lewis Carroll”, authored a number of children’s books including the aforementioned titles. What makes these books so special is that Dodgson was also a mathematician and embedded numerous mathematical references in these works. Most people might be familiar with the many film adaptations of these works, but I’d highly recommend reading the originals with an eye towards the logical riddles and mathematical puzzles hidden in these classics. You can find these books online at Project Gutenberg. For a little taste of the mathematics involved, you might start here.

Charles Seife – “Proofiness: The Dark Arts of Mathematical Deception”

Recommended for: teens and older, casual readers, anyone who doesn’t think math is relevant to daily life

This book focuses on what I consider to be an important topic in the current socio-political climate. Ordinary people are repeatedly bombarded by “deceptive mathematics”. Whether the source is trying to sell a product or push a political agenda, the inclusion of numeric figures or fancy graphs can go a long way to make a claim look more legitimate than it really is. Proofiness spells out some of the common warning signs of deceitful mathematics, so that the reader can be more aware of these practices. While somewhat lighter on mathematical content than more advanced readers might expect, I think this book sheds some much-needed light on an important social issue and was an enjoyable read. If you like this, you may also like How to Lie with Statistics by Darrell Huff.

Apostolos Doxiadis, Christos H. Papadimitriou, Alecos Papadatos and Annie Di Donna – Logicomix

Recommended for: casual readers, comic book fans

Technically this is a graphic novel instead of your typical book, but that doesn’t mean it doesn’t cover some important mathematics! Logicomix presents Bertrand Russell as the protagonist in a series of historical events that took place in the early 20th century, culminating with Kurt Gödel’s Incompleteness Theorem, which shook the very foundation of mathematics. Logicomix makes superheroes out of mathematicians in an epic story, while exposing the reader to some amazing mathematics. It ties in nicely with Gödel, Escher, Bach below.

David Richeson – “Euler’s Gem”

Recommended for: casual readers curious about topology

I was looking for a casual introduction to topology and found this little “gem”. This is the book that I wish I had read while studying topology in college! It covers everything from the basic principles of topology to the recently solved Poincaré Conjecture. Don’t let all this mathematics scare you away from this title! The book is still written in a very approachable manner. It chronicles the life history of Leonhard Euler, presenting the development of the field of topology in a context that even the casual reader can enjoy.

Douglas Hofstadter – “Gödel, Escher, Bach: An Eternal Golden Braid”

Recommended for: semi-casual readers with diverse interests

When someone asks me for “a good math book”, this is my go-to recommendation. This book has a little something for everyone. Math, music, art, language, computers, biology, and psychology are woven seamlessly into a humorous and playful narrative, reminiscent of Lewis Carroll. It goes deep into mathematical concepts where appropriate, and uses visual material and metaphor to bring complex concepts down to Earth. I listed it as “semi-casual” due to the depth of mathematics involved, but a casual reader can skip some of the more math-intensive parts and still get a nice overview of the general principles.

Jean-Pierre Changeux and Alain Connes – “Conversations on Mind, Matter, and Mathematics”

Recommended for: semi-casual readers, with interest in philosophy

This book spans several conversations between a mathematician and a neurologist on the nature of mathematics. One of the central questions is whether mathematical ideas have an existence of their own, or whether they exist only within the neurology of the human brain. Both authors present some fascinating support for their side of the argument. The material can be a little dense at times, making reference to advanced research as if it were common knowledge, and might not be appropriate for more casual readers. However, a reader willing to dig into these arguments will discover two fascinating perspectives on the philosophy of mathematics.

James Gleick – “Chaos: Making a New Science”

Recommended for: semi-casual readers, preferably with some Calculus experience

Chaos takes the reader on a historical journey through the emergence of Chaos Theory as a mathematical field. It is an amazing journey through the work of numerous mathematicians in different fields, who came upon systems exhibiting “sensitive dependence on initial conditions”. This book serves as an introduction to both Chaos Theory and non-linear dynamics, while shedding light on the process behind the development of this field. Some experience with differential equations would be beneficial to the reader, but more casual readers can get by with the assistance of the wonderful visual aids. A nice complement to A New Kind of Science below.
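For readers wondering what “sensitive dependence on initial conditions” looks like in practice, here is a minimal sketch. It uses the logistic map, a standard textbook example of a chaotic system. The choice of map, the parameter r = 4, the starting value 0.2, and the function name `logistic_orbit` are my own assumptions for illustration and are not quoted from the book. Two starting points that differ by one part in ten billion end up on completely different trajectories within a few dozen steps.

```python
def logistic_orbit(x0, r=4.0, steps=60):
    """Iterate x -> r * x * (1 - x) and return every value visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting points that differ only in the tenth decimal place.
a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)

# Distance between the two orbits at each step.
gaps = [abs(x - y) for x, y in zip(a, b)]

for n in range(0, 61, 10):
    print(f"step {n:2d}: gap = {gaps[n]:.3e}")
```

The gap grows roughly exponentially until it is as large as the values themselves, which is why long-term prediction fails for such systems even though the rule is completely deterministic.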

Roger Penrose – “The Emperor’s New Mind: Concerning Computers, Minds, and the Laws of Physics”

Recommended for: more advanced readers with interests in physics and artificial intelligence

Roger Penrose is a well-established mathematical physicist, and The Emperor’s New Mind offers an accurate and well-written overview of quantum physics. However, what makes this book interesting is that Penrose uses this physics and mathematics to mount an attack on what Artificial Intelligence researchers describe as “strong AI”. Penrose makes the case that Gödel’s Incompleteness Theorem implies that cognitive psychology’s information processing model is inherently flawed, and that the human mind cannot be realistically modeled by a computer. Whether you agree with Penrose’s conclusions or not, his argument is insightful and is something that needs to be addressed as the field of cognitive psychology moves forward.

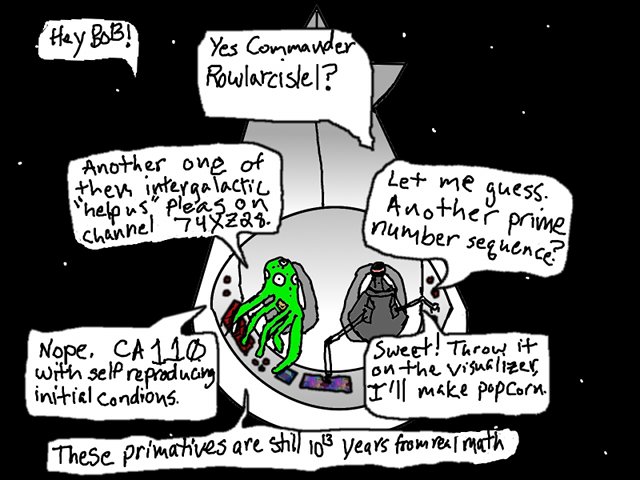

Stephen Wolfram – “A New Kind of Science”

Recommended for: more advanced readers with interest in computer science

Don’t let its size intimidate you. If you made it through the titles above, then you should be ready to make headway into this giant tome. The central theme of A New Kind of Science is that complex phenomena can emerge from simple systems of rules. This is different from Chaos Theory (described above), in that this complexity can emerge regardless of initial conditions. A New Kind of Science takes the stance that we can learn a great deal about mathematics through experimentation, and makes the case that perhaps the vast complexity of the universe around us can be explained by a few simple rules.
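To give a flavor of “complex phenomena from simple rules”, here is a small sketch of an elementary cellular automaton, the kind of system the book experiments with. It runs Rule 30 from a single filled cell. The function names `step` and `run`, the grid width, and the wrap-around edges are my own assumptions for illustration, not something taken from the book.

```python
def step(cells, rule=30):
    """Apply one update of an elementary cellular automaton (edges wrap around)."""
    n = len(cells)
    out = []
    for i in range(n):
        left, mid, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (mid << 1) | right  # neighbourhood as a number 0..7
        out.append((rule >> index) & 1)           # read that bit of the rule number
    return out


def run(width=63, steps=30, rule=30):
    """Start from a single filled cell in the middle and return every row."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


for row in run():
    print("".join("#" if c else " " for c in row))
```

The whole rule fits in a single number between 0 and 255, yet the printed triangle shows an irregular pattern with no obvious repetition. Passing a different `rule` value to `run` is an easy way to try the kind of experimentation the book advocates.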
